Artificial intelligence is claiming its place in the medical technology sector - with enormous potential, but also new regulatory challenges. The EU AI Act (Regulation (EU) 2024/1689) establishes, for the first time, a harmonized legal framework for AI systems. At the same time, cybersecurity requirements are becoming more stringent. Manufacturers who act strategically now will not only ensure compliance but also gain a significant competitive advantage.

Learn more – talk to us today!

Our services

Artificial intelligence (AI) and machine learning (ML) open up a wide range of applications in medical devices. Often, these are health software solutions - classified as medical device software (MDSW) or software as a medical device (SaMD). Increasingly, however, AI/ML technologies are also being integrated into devices and systems with embedded software.

We support you throughout the development of a regulatory-compliant, market-ready AI/ML application - holistically, pragmatically, and with a future-proof approach. Whether you are developing a medical device with integrated AI or using AI in regulatory or quality management (QM) processes, we have you covered.

Regulatory strategy and classification

Joint assessment of the regulatory framework applicable to your AI/ML application. The goal: a sound and efficient market access strategy aligned with European and international requirements (AI Act, MDR/IVDR, FDA, and others).

Requirements analysis and interpretation

Identification of applicable laws, regulations, standards, and guidance documents, and their translation into actionable project steps. Potential references include: AI Act, ISO 13485, ISO/IEC 42001, ISO/IEC 23894, ISO 14971, IEC 62304, IEC 81001-5-1, IEC 82304-1, IEC 60601-1, ISO/IEC 27001, ISO/IEC 27701, IEC TR 80002-1, ISO/IEC 24029, or relevant FDA guidance.

GAP analysis of your technical documentation

Review of existing documentation for compliance gaps regarding the AI Act, MDR/IVDR, and applicable standards, including actionable recommendations.

Creation and optimization of technical documentation

From risk management and clinical evaluation to post-market surveillance: we prepare or enhance your documentation to make it compliant and audit-proof.

Adapting your processes for AI/ML

Support with extending your quality management system and adapting your development, software, and data processes to meet the requirements of AI-based systems.

Data management for AI

Development of robust, traceable data management strategies for training, validation, and tuning data – for compliant documentation that stands up to regulatory scrutiny.

360° regulatory support

Alignment of product and software lifecycle processes with the specific needs of AI-/ML-based systems.

Preparation and coaching for certification, market authorization, and audits.

AI Act mapping and use case analysis

Which parts of the AI Act apply to your product? Which annexes, chapters, and obligations are relevant? We assess and classify your use case in accordance with AI Act requirements.

Assurance and oversight of AI systems

Support in demonstrating accuracy, robustness, human oversight, and transparency as required under the AI Act.

Evaluation of training and test data

Interpretation and documentation of data sources - traceable and verifiable for notified bodies and authorities.

Communication with notified bodies

Assistance with your interactions with notified bodies and regulatory authorities, both within the EU and internationally (e.g., the FDA).

Workshops and in-house training for your team

Training on regulatory requirements and workshops on strategic implementation of AI systems for your development, QM, or regulatory affairs teams.

Let’s get together and explore what you need - free and without obligation.

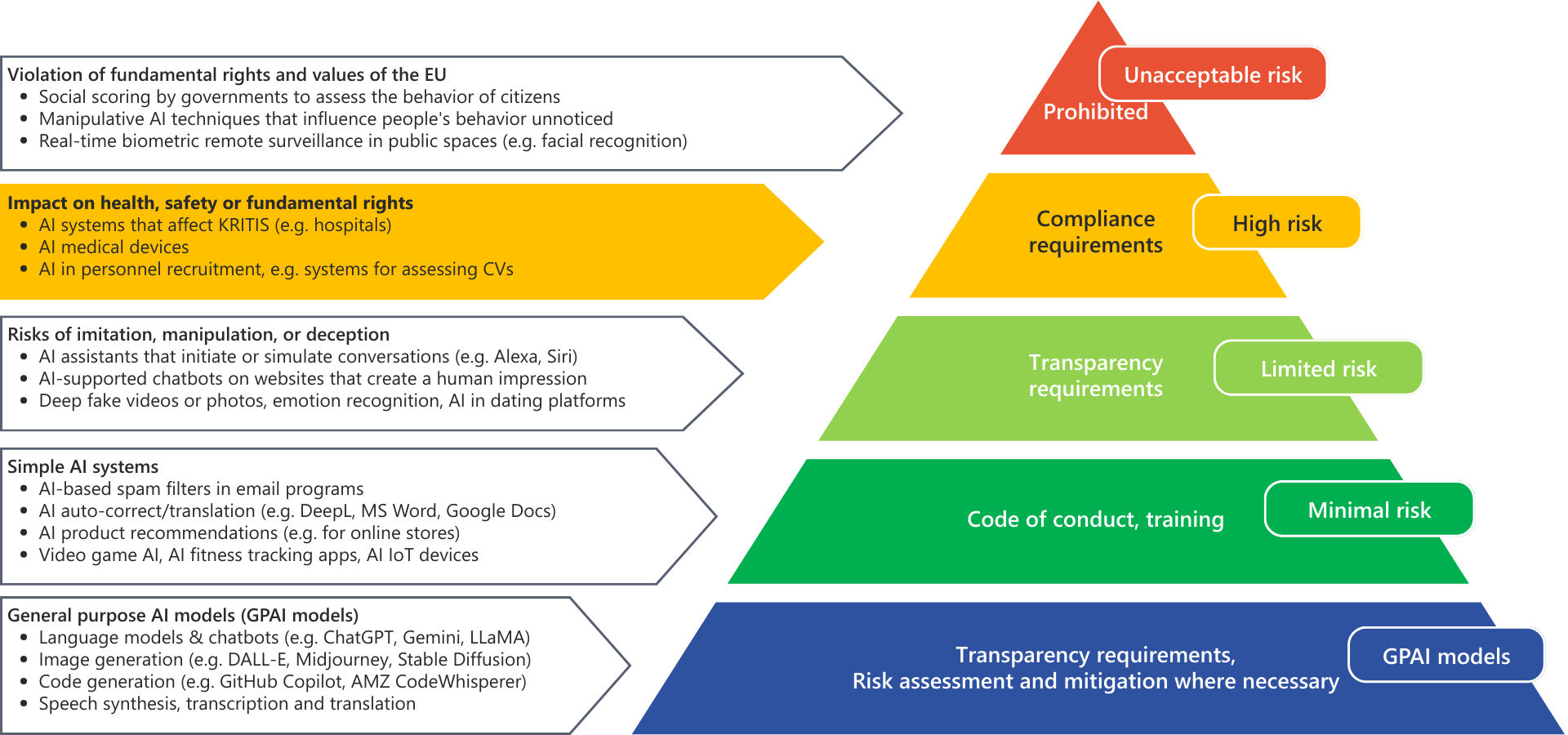

The AI Act: New rules for AI systems

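The AI Act establishes, for the first time, a mandatory legal framework for AI within the EU - comparable to the MDR/IVDR. Particularly affected are medical devices with AI components, which are typically classified as high-risk AI systems: under Article 6(1) of the AI Act, this applies when the AI system is itself the device or a safety component of it and the device requires a notified body conformity assessment under the MDR/IVDR.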

AI Act: What do I need to consider as a manufacturer or provider of medical devices?

- Specific risk analysis of the AI functionality and classification in accordance with the AI Act

- Demonstration of robustness, accuracy in the context of use, and information security

- Implementation of human oversight measures, based on the explainability of the AI outputs

- Documentation and evaluation of training, tuning, and test datasets

- Compliance with transparency requirements, instructions for use, and traceability of AI results

- Technical documentation, conformity assessment, and registration in accordance with the AI Act

- Logging and log retention to ensure traceability
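
Article 12 of the AI Act requires high-risk AI systems to record events automatically, but it does not prescribe a particular technique. As a minimal sketch of one option, assuming hash chaining is acceptable in your context, the example below links each log entry to its predecessor with a SHA-256 hash so that later edits to stored entries become detectable. The class name `HashChainedLog` and the record fields (`model_version`, `input_id`, `output`) are hypothetical illustrations, not a prescribed format.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # predecessor hash assumed for the very first entry


def entry_hash(prev_hash: str, record: dict) -> str:
    # Canonical JSON (sorted keys, fixed separators) so identical records
    # always produce identical bytes and therefore identical hashes.
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


class HashChainedLog:
    """Hypothetical in-memory, append-only log; each entry commits to its predecessor.
    Persistent storage and access control are out of scope for this sketch."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, record: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        # Add a UTC timestamp so that the hash also covers the time of the event.
        record = {**record, "timestamp": datetime.now(timezone.utc).isoformat()}
        entry = {"record": record, "prev_hash": prev_hash,
                 "hash": entry_hash(prev_hash, record)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        # Recompute every hash; any edited record or broken link fails the check.
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != entry_hash(prev_hash, entry["record"]):
                return False
            prev_hash = entry["hash"]
        return True


# Usage with hypothetical record fields:
log = HashChainedLog()
log.append({"model_version": "1.2.0", "input_id": "case-001", "output": "suspicious"})
log.append({"model_version": "1.2.0", "input_id": "case-002", "output": "normal"})
print(log.verify())  # True
log.entries[0]["record"]["output"] = "normal"  # simulated tampering with a stored entry
print(log.verify())  # False
```

A hash chain on its own does not reveal deletion of the most recent entries; in practice, the latest hash would additionally be stored or signed in a place the log writer cannot alter.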

AI Act: What do I need to consider as a deployer (i.e., user) of high-risk AI systems?

- Use of the system in accordance with the provider’s instructions for use

- Implementation of human oversight measures, based on the explainability of AI outputs

- Assurance of the quality, relevance, and representativeness of input data

- Fulfillment of monitoring and information obligations

- Compliance with information obligations toward affected individuals

- Logging and log retention to ensure traceability

There is a lot to consider. You can count on us!

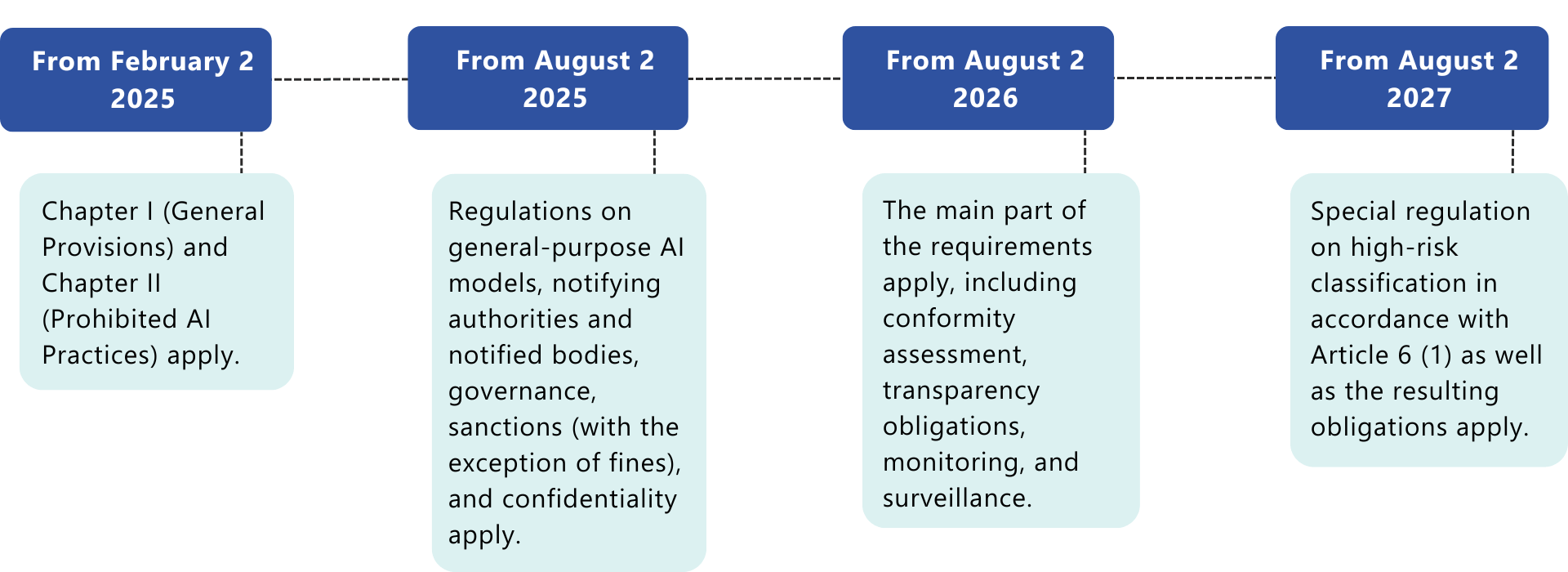

AI Act: Gain an edge - don’t fall behind

The AI Act is not a distant prospect: it entered into force on 1 August 2024, its transition periods are already running, and many of its requirements overlap with existing regulations such as the MDR/IVDR and ISO standards. Waiting today risks high costs, a lack of resources, and delays in market approval tomorrow.

Now is the time to act – and here are three compelling reasons why:

1. Avoid costly rework

Addressing the AI Act late often triggers critical feedback from notified bodies, time-consuming remediation, and, in the worst case, halted approval processes. Early GAP analyses help identify and mitigate these risks proactively.

2. Enable confident planning for product development

AI functionality is often conceptualized long before it is clear whether it falls under the AI Act. Integrating regulatory requirements early saves time, cost, and frustration.

3. Send a strong signal to the market and regulators

A company that proactively addresses the AI Act demonstrates responsibility, foresight, and professionalism. This builds trust with customers, regulators, and potentially investors.

We are here to help. Be strategically prepared rather than caught off guard, and get clarity on your next steps. Contact us for your personal consultation.

Cybersecurity and the AI Act: A must-have - especially with AI

Cybersecurity is not a secondary issue under the AI Act - it is a core regulatory requirement. Article 15 requires high-risk AI systems to achieve an appropriate level of accuracy, robustness, and cybersecurity: they must be demonstrably developed and operated to be robust, secure, and resilient against attacks, malfunctions, and tampering. This is particularly critical for medical devices whose results directly influence diagnosis or treatment, both from the patient's perspective and from the authorities' point of view.

Cybersecurity obligations for manufacturers or providers include, among others:

- Cybersecurity strategy: This must be fully integrated into the quality management system, with particular attention to MDR/IVDR requirements. This includes the IT security of all third-party software integrated into the AI or used to develop it (tool chain).

- Ensuring data integrity: Training and input data must be protected against tampering, loss, and unauthorized access.

- Securing decision-making processes: AI-driven decisions must not be vulnerable to external interference or system errors.

- Monitoring and logging: All system activities must be fully logged and auditable.

- Early warning systems and corrective mechanisms: Manufacturers must be able to detect and correct undesirable effects promptly.

Team NB's position paper (April 2025) emphasizes that cybersecurity in the context of AI goes beyond traditional IT security: the security architecture must be technically resilient and compliant with regulatory requirements from the outset.

We support you in embedding cybersecurity as an integral part of your regulatory strategy, so it doesn't become a costly afterthought.

Let's assess together whether your security architecture meets regulatory expectations.
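
One common way to make the integrity of training, tuning, and test data verifiable is a checksum manifest: record a SHA-256 digest for every data file when a dataset version is frozen, then recompute and compare before each training or evaluation run. The sketch below assumes the datasets are stored as files in a directory; the function names and the JSON manifest layout are hypothetical choices for illustration, not a format required by the AI Act or the MDR/IVDR.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    # Read the file in 1 MiB chunks so large image or signal files need not fit in memory.
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    # Map each file's path (relative to data_dir, POSIX style) to its SHA-256 digest.
    return {p.relative_to(data_dir).as_posix(): sha256_of(p)
            for p in sorted(data_dir.rglob("*")) if p.is_file()}


def find_deviations(data_dir: Path, manifest: dict[str, str]) -> dict[str, list[str]]:
    # Compare the current contents of data_dir with a previously frozen manifest.
    current = build_manifest(data_dir)
    return {
        "missing": sorted(set(manifest) - set(current)),
        "added": sorted(set(current) - set(manifest)),
        "modified": sorted(k for k in manifest.keys() & current.keys()
                           if manifest[k] != current[k]),
    }


# Usage on a throwaway directory standing in for a hypothetical training dataset:
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "train").mkdir()
    (root / "train" / "case-001.csv").write_text("id,label\n1,0\n")
    frozen = build_manifest(root)  # would be stored with the dataset version
    print(json.dumps(frozen, indent=2))
    (root / "train" / "case-001.csv").write_text("id,label\n1,1\n")  # simulated tampering
    print(find_deviations(root, frozen))
    # {'missing': [], 'added': [], 'modified': ['train/case-001.csv']}
```

Checksums only detect changes; they do not prevent unauthorized access, so access control and backups remain separate measures. Keeping the frozen manifest under version control alongside the dataset documentation could provide a verifiable link between a model version and the exact data it was trained and tested on.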

Software in Production

Complex production processes are hardly conceivable without software. However, manufacturers must provide evidence that this software operates with minimized risk within QM-relevant processes (ISO 13485:2016 requires the validation of such software, e.g., in clauses 4.1.6 and 7.5.6). We support you in correctly identifying and evaluating the software and in representing the processes appropriately within your quality management system. Our experts are happy to advise and support you in all validation activities.

Contact us now!

The more complex the device, the higher the demands on modern production; the more networked the production, the higher the share of software. Keep control of this growing complexity by validating your software.

Do you know them all? We do!

ISO 13485:2016 specifies the requirements for a quality management system in which an organization must demonstrate its ability to provide medical devices and related services that consistently meet customer requirements and applicable regulatory requirements.

The International Society for Pharmaceutical Engineering (ISPE) introduced the risk-based approach to the validation of computerized systems in 2008 with the GAMP 5 guideline; the 2nd edition of GAMP 5 was published in 2022. GAMP 5 applies to computerized systems used in the medical device and pharmaceutical industries and explains in great detail how to carry out risk-based validation.

ISO/TR 80002-2:2017 applies to any software used in device design, testing, component acceptance, manufacturing, labeling, packaging, distribution, and complaint handling, or to automate any other aspect of a medical device quality system as described in ISO 13485.

AAMI TIR36:2007 is a best-practice guide for the "Validation of software for regulated processes". It applies to the same software as ISO/TR 80002-2:2017 and can also be applied to software that creates, modifies, or stores electronic records and to software that manages electronic signatures, provided they are subject to validation requirements. Its scope covers the regulatory requirements of 21 CFR Part 820 (Quality System Regulation) and 21 CFR Part 11 (Electronic Records; Electronic Signatures).